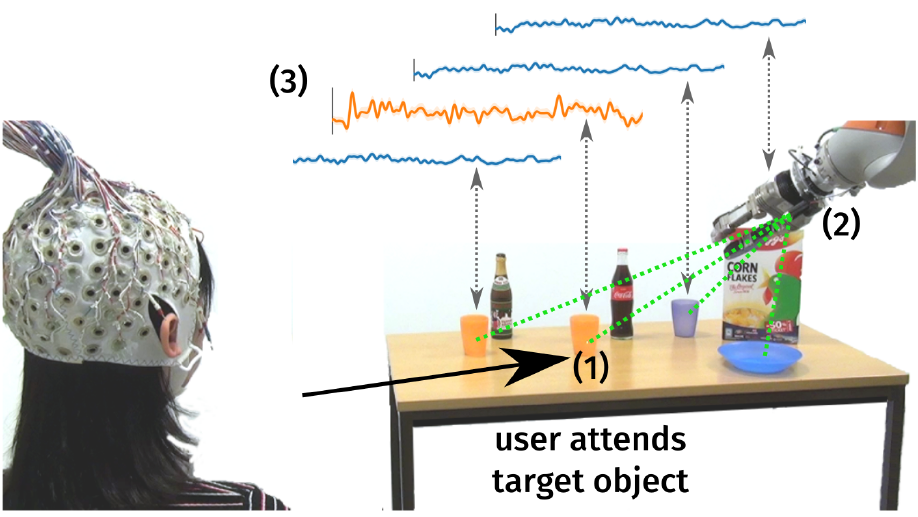

A Robust Screen-Free Brain-Computer Interface for Robotic Object Selection

Brain signals represent a communication modality that can allow users of assistive robots to specify high-level goals, such as the object to fetch and deliver. In this paper, we consider a screen-free Brain-Computer Interface (BCI), where the robot highlights candidate objects in the environment using a laser pointer, and the user’s goal is decoded from the evoked responses in the electroencephalogram (EEG). Having the robot present stimuli in the environment allows for more direct commands than traditional BCIs that require graphical user interfaces. Yet bypassing the screen also means less control over how the stimuli appear. In realistic environments, this leads to heterogeneous brain responses for dissimilar objects, which poses a challenge for reliable EEG classification. We model object instances as subclasses to train specialized classifiers in the Riemannian tangent space, each of which is regularized by incorporating data from other objects. In multiple experiments with a total of 19 healthy participants, we show that our approach not only increases classification performance but is also robust to both heterogeneous and homogeneous objects. While especially useful in the case of a screen-free BCI, our approach can naturally be applied to other experimental paradigms with a potential subclass structure.
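
To make the idea of subclass-specific classifiers in the Riemannian tangent space more concrete, here is a minimal sketch under several assumptions that are not taken from the paper: the reference point is the arithmetic mean of the covariance matrices (instead of the Riemannian mean), each per-object classifier is a shrinkage LDA, and "regularized by incorporating data from other objects" is modeled by giving samples of other objects a reduced weight (the hypothetical parameter `other_weight`). All function names are illustrative.

```python
import numpy as np


def _sym_fun(mat, fun):
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    vals, vecs = np.linalg.eigh(mat)
    return (vecs * fun(vals)) @ vecs.T


def tangent_space(covs, ref):
    """Map SPD matrices to the tangent space at `ref` (affine-invariant log map)."""
    ref_isqrt = _sym_fun(ref, lambda v: 1.0 / np.sqrt(v))
    iu = np.triu_indices(ref.shape[0])
    # Off-diagonal entries are scaled by sqrt(2) so the Euclidean norm of the
    # vector equals the Frobenius norm of the symmetric log matrix.
    weights = np.where(iu[0] == iu[1], 1.0, np.sqrt(2.0))
    feats = [weights * _sym_fun(ref_isqrt @ c @ ref_isqrt, np.log)[iu] for c in covs]
    return np.array(feats)


def fit_lda(x, y, w, shrink=0.1):
    """Weighted two-class LDA with shrinkage of the pooled covariance."""
    mu = [np.average(x[y == k], axis=0, weights=w[y == k]) for k in (0, 1)]
    centered = x - np.where(y[:, None] == 1, mu[1], mu[0])
    cov = (centered * w[:, None]).T @ centered / w.sum()
    nu = np.trace(cov) / cov.shape[0]
    cov = (1 - shrink) * cov + shrink * nu * np.eye(cov.shape[0])
    coef = np.linalg.solve(cov, mu[1] - mu[0])
    bias = -coef @ (mu[0] + mu[1]) / 2
    return coef, bias  # score > 0 means "target"


def fit_subclass_classifiers(feats, y, obj, other_weight=0.3):
    """One classifier per object; samples of other objects get a lower weight."""
    models = {}
    for o in np.unique(obj):
        w = np.where(obj == o, 1.0, other_weight)
        models[o] = fit_lda(feats, y, w)
    return models
```

In use, one would compute `feats = tangent_space(covs, covs.mean(axis=0))`, fit `fit_subclass_classifiers(feats, y, obj)`, and score a new stimulus with the classifier of the object that was highlighted. Setting `other_weight=0` yields fully separate classifiers and `other_weight=1` a single pooled classifier, so the weight interpolates between the two.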

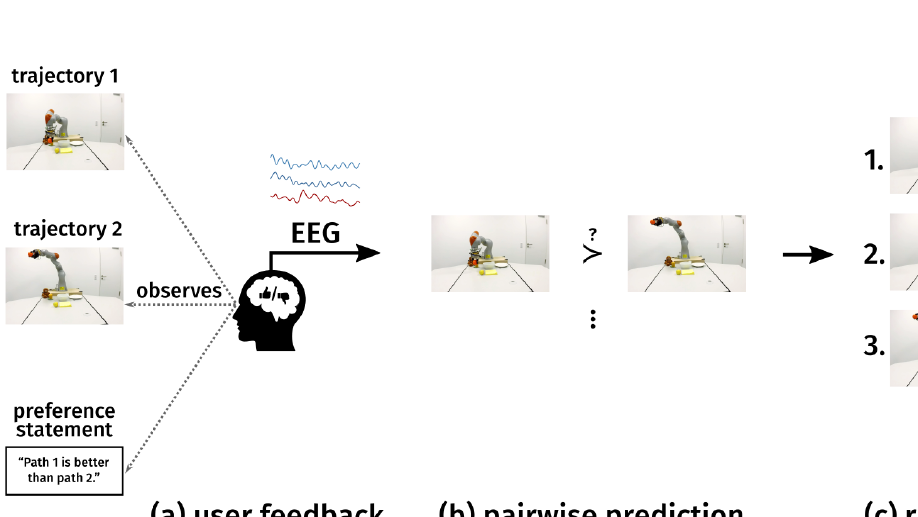

Learning User Preferences for Trajectories from Brain Signals

Robot motions in the presence of humans should not only be feasible and safe, but also conform to human preferences. This, however, requires user feedback on the robot’s behavior. In this work, we propose a novel approach that leverages the user’s brain signals as a feedback modality in order to decode the user’s judgment of robot trajectories and rank the trajectories according to the user’s preferences. We show that brain signals measured using electroencephalography while the user observes a robotic arm’s trajectory, as well as in response to preference statements, are informative of the user’s preference. Furthermore, we demonstrate that user feedback from brain signals can be used to reliably infer pairwise trajectory preferences and to retrieve the user’s preferred trajectories among those observed, with a performance comparable to explicit behavioral feedback.
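
One way to turn decoded pairwise preferences into a ranking is a Bradley-Terry model. The sketch below assumes a matrix `wins[i, j]` that counts how often trajectory `i` was decoded as preferred over trajectory `j`; it fits the model with the standard minorization-maximization update. This is an illustrative choice, not necessarily the ranking procedure used in the paper, and the pseudo-count `prior` is a hypothetical regularizer that keeps strengths finite.

```python
import numpy as np


def bradley_terry(wins, n_iter=200, prior=0.1):
    """Estimate Bradley-Terry strengths from a pairwise win matrix.

    wins[i, j] counts how often trajectory i was (decoded as) preferred over j.
    The pseudo-count `prior` keeps strengths finite for items without wins.
    """
    w = np.asarray(wins, dtype=float) + prior
    np.fill_diagonal(w, 0.0)
    n = w + w.T                   # number of comparisons per pair
    total_wins = w.sum(axis=1)
    p = np.ones(len(w))
    for _ in range(n_iter):
        # MM update: p_i = W_i / sum_j n_ij / (p_i + p_j)
        denom = (n / (p[:, None] + p[None, :])).sum(axis=1)
        p = total_wins / denom
        p /= p.sum()              # strengths are only defined up to scale
    return p


def rank_trajectories(wins):
    """Return trajectory indices ordered from most to least preferred."""
    return np.argsort(-bradley_terry(wins))
```

Under the model, the probability that trajectory `i` is preferred over `j` is `p[i] / (p[i] + p[j])`, so the fitted strengths also yield pairwise preference estimates for pairs that were never compared directly.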

Heterogeneity of Event-Related Potentials in a Screen-Free Brain-Computer Interface

Interacting with the environment using a brain-computer interface involves mapping a decoded user command to a desired real-world action, which is typically achieved using screen-based user interfaces. This indirection can increase the cognitive workload of the user. Recently, we have proposed a screen-free interaction approach utilizing visual in-the-scene stimuli. Sequentially highlighting object surfaces in the user’s environment with a laser allows the user to, e.g., select these objects to be fetched by an assistive robot. In this paper, we investigate the influence of stimulus subclasses (differing surfaces between objects as well as stimulus position within a sequence) on the electrophysiological response and the decodability of visual event-related potentials. We find that evoked responses differ depending on the subclass. Additionally, we show that, given ample data, subclass-specific classifiers can be a feasible approach to address the heterogeneity of responses.
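
A simple first look at subclass heterogeneity is to compare subclass-wise target-minus-non-target averages. The sketch below assumes epoched EEG in an array of shape `(n_epochs, n_channels, n_times)` together with per-epoch target flags and subclass labels (for example, the highlighted surface or the position within the sequence); the function name is hypothetical.

```python
import numpy as np


def subclass_difference_waves(epochs, is_target, subclass):
    """Target-minus-non-target average ERP for each stimulus subclass.

    epochs: array (n_epochs, n_channels, n_times)
    is_target: boolean array (n_epochs,)
    subclass: array (n_epochs,) of subclass labels, e.g. object surface or
        position of the stimulus within the highlighting sequence
    """
    waves = {}
    for s in np.unique(subclass):
        sel = subclass == s
        tgt = epochs[sel & is_target].mean(axis=0)
        non = epochs[sel & ~is_target].mean(axis=0)
        waves[s] = tgt - non      # shape (n_channels, n_times)
    return waves
```

If the difference waves of two subclasses differ markedly in amplitude or latency, a single pooled classifier has to cover both response shapes, which motivates the subclass-specific classifiers described above.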

Influence of User Tasks on EEG-Based Classification Performance in a Hazard Detection Paradigm

Attention-based brain-computer interface (BCI) paradigms offer a way to exert control, but also to provide insight into a user’s perception and judgment of the environment. For sufficient classification performance, user engagement and motivation are critical. Consequently, many paradigms require the user to perform an auxiliary task, such as mentally counting a subset of stimuli or pressing a button when encountering them. In this work, we compare two user tasks, mental counting and button presses, in a hazard detection paradigm in driving videos. We find that both the binary classification performance of events based on the electroencephalogram and user preference are higher for button presses. Amplitudes of evoked responses, by contrast, are higher for the counting task, an observation that holds even after projecting out motor-related potentials during data preprocessing. Our results indicate that button presses can be the preferable choice in such BCIs, based on both prediction performance and user preference.
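
"Projecting out" motor-related potentials can be implemented as an orthogonal projection in channel space, similar in spirit to signal-space projection. The following sketch assumes that the motor-related activity is captured by the leading spatial component(s) of the average button-press-locked response; this estimation step and both function names are assumptions for illustration, not the preprocessing reported in the paper.

```python
import numpy as np


def motor_pattern(press_locked_epochs, n_components=1):
    """Leading spatial components of the average press-locked response.

    press_locked_epochs: array (n_epochs, n_channels, n_times)
    """
    avg = press_locked_epochs.mean(axis=0)        # (n_channels, n_times)
    u, _, _ = np.linalg.svd(avg, full_matrices=False)
    return u[:, :n_components]                    # (n_channels, n_components)


def project_out(data, patterns):
    """Remove the subspace spanned by `patterns` from multichannel data.

    data: array (n_channels, n_times)
    patterns: array (n_channels, k) of spatial patterns to suppress
    """
    q, _ = np.linalg.qr(patterns)                 # orthonormal basis of the subspace
    proj = np.eye(data.shape[0]) - q @ q.T        # projector onto the complement
    return proj @ data
```

Because the projection removes every signal component along the suppressed topographies, any evoked activity that shares those topographies is attenuated as well; amplitude differences that survive the projection are therefore unlikely to be explained by the suppressed motor pattern alone.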

Mining Within-Trial Oscillatory Brain Dynamics to Address the Variability of Optimized Spatial Filters

Data-driven spatial filtering algorithms optimize scores, such as the contrast between two conditions, to extract oscillatory brain signal components. Most machine learning approaches for filter estimation, however, disregard within-trial temporal dynamics and are extremely sensitive to changes in the training data and the involved hyperparameters. This leads to highly variable solutions and impedes the selection of a suitable candidate for, e.g., neurotechnological applications. To foster component introspection, we propose to embrace this variability by condensing the functional signatures of a large set of oscillatory components into homogeneous clusters, each representing specific within-trial envelope dynamics. The proposed method is exemplified by and evaluated on a complex hand force task with a rich within-trial structure. Based on electroencephalography data of 18 healthy subjects, we found that the components' distinct temporal envelope dynamics are highly subject-specific. On average, we obtained seven clusters per subject, which were strictly confined with respect to their underlying frequency bands. As the analysis method is not limited to a specific spatial filtering algorithm, it could be utilized for a wide range of neurotechnological applications, e.g., to select and monitor functionally relevant features for brain-computer interface protocols in stroke rehabilitation.
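
The core step of grouping components by their within-trial envelope dynamics can be sketched as follows. The sketch assumes that each component's band-passed, spatially filtered time course is already available per trial; it computes the trial-averaged Hilbert envelope and groups components with average-linkage hierarchical clustering on correlation distance. The clustering algorithm and the fixed number of clusters are stand-ins chosen for illustration, not the paper's exact procedure.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage
from scipy.signal import hilbert


def mean_envelope(component_trials):
    """Trial-averaged amplitude envelope of one band-passed component.

    component_trials: array (n_trials, n_times)
    """
    env = np.abs(hilbert(component_trials, axis=-1))  # instantaneous amplitude
    return env.mean(axis=0)


def cluster_envelopes(envelopes, n_clusters):
    """Group components whose envelope time courses have a similar shape.

    envelopes: array (n_components, n_times)
    Correlation distance ignores offset and scale, so only the temporal
    shape of the envelope determines cluster membership.
    """
    tree = linkage(envelopes, method="average", metric="correlation")
    return fcluster(tree, t=n_clusters, criterion="maxclust")
```

Because the envelopes are compared only by shape, components obtained from different filtering runs or hyperparameter settings can fall into the same cluster even if their spatial filters differ, which is what allows the variability across solutions to be summarized rather than resolved by picking a single filter.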

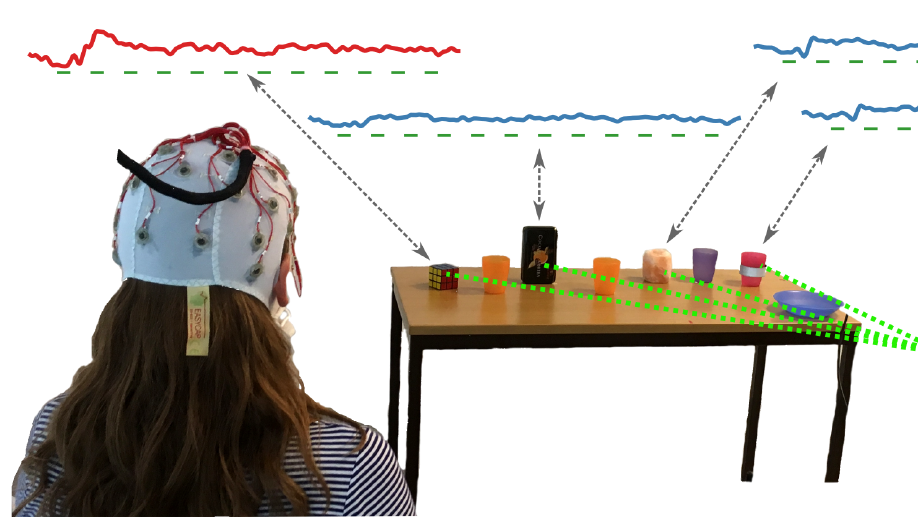

Guess What I Attend: Interface-Free Object Selection Using Brain Signals

Interpreting brain activity to identify user goals or to ground a robot’s hypotheses about them is a promising direction for non-intrusive and intuitive communication. Such a capability can be particularly relevant in human-robot cooperation scenarios. This paper proposes a novel approach that uses the natural brain responses to highlighted objects in the scene for object selection, which circumvents the need for additional interfaces or user training. Our approach uses methods from information geometry to classify these event-related potentials as target or non-target responses. Online experiments carried out with a real robot demonstrate accurate detection of target objects based solely on the user’s attention.
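
A common information-geometric classifier for EEG covariance matrices is minimum distance to mean (MDM): each class is represented by its Riemannian (Karcher) mean, and a trial is assigned to the class whose mean is closest under the affine-invariant distance. The sketch below shows this technique as one plausible example; the abstract does not specify which classifier or covariance construction the paper uses, and all names here are illustrative.

```python
import numpy as np
from scipy.linalg import eigvalsh


def _sym_fun(mat, fun):
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    vals, vecs = np.linalg.eigh(mat)
    return (vecs * fun(vals)) @ vecs.T


def riemann_distance(a, b):
    """Affine-invariant distance between SPD matrices a and b."""
    lam = eigvalsh(b, a)  # generalized eigenvalues of b v = lam a v
    return np.sqrt(np.sum(np.log(lam) ** 2))


def riemann_mean(covs, n_iter=50, tol=1e-10):
    """Karcher (geometric) mean of SPD matrices by fixed-point iteration."""
    m = covs.mean(axis=0)  # start from the arithmetic mean
    for _ in range(n_iter):
        m_sqrt = _sym_fun(m, np.sqrt)
        m_isqrt = _sym_fun(m, lambda v: 1.0 / np.sqrt(v))
        # Average of the log-mapped matrices in the tangent space at m
        t = np.mean([_sym_fun(m_isqrt @ c @ m_isqrt, np.log) for c in covs], axis=0)
        m = m_sqrt @ _sym_fun(t, np.exp) @ m_sqrt
        if np.linalg.norm(t) < tol:
            break
    return m


class MinimumDistanceToMean:
    """Assign each covariance matrix to the class with the nearest Riemannian mean."""

    def fit(self, covs, labels):
        self.classes_ = np.unique(labels)
        self.means_ = [riemann_mean(covs[labels == k]) for k in self.classes_]
        return self

    def predict(self, covs):
        d = np.array([[riemann_distance(m, c) for m in self.means_] for c in covs])
        return self.classes_[d.argmin(axis=1)]
```

The affine-invariant distance is unchanged when both matrices are transformed by the same invertible matrix, so, for example, a common rescaling of all channels does not alter the classification.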

Decoding Hazardous Events in Driving Videos

Decoding the human brain state with BCI methods can be seen as a building block for human-machine interaction, providing a noisy but objective, low-latency information channel that includes human reactions to the environment. In the context of autonomous driving specifically, human judgment is relevant for high-level scene understanding. Despite advances in computer vision and scene understanding, it remains challenging to go from the detection of traffic events to the detection of hazards. We present a preliminary study on hazard perception using natural driving videos, which were augmented with artificial events to create potentially hazardous driving situations. We decode brain signals from electroencephalography (EEG) in order to classify single events as hazardous or non-hazardous. We find that event-related responses can be discriminated, with event classification reaching an area under the ROC curve (AUC) of 0.79. We see these results as a step towards incorporating EEG feedback into more complex, real-world tasks.
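
The reported AUC can be computed from classifier scores through its equivalence with the Mann-Whitney U statistic. The sketch below assumes per-event scores (higher meaning "more likely hazardous") and binary labels; the function name is illustrative.

```python
import numpy as np
from scipy.stats import rankdata


def roc_auc(scores, labels):
    """Area under the ROC curve via the Mann-Whitney U statistic.

    labels: 1 for hazardous events, 0 for non-hazardous ones.
    Tied scores receive average ranks, i.e. a tie counts as half a win.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels).astype(bool)
    ranks = rankdata(scores)
    n_pos, n_neg = labels.sum(), (~labels).sum()
    u = ranks[labels].sum() - n_pos * (n_pos + 1) / 2
    return u / (n_pos * n_neg)


print(roc_auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))  # 0.75
```

Read this way, an AUC of 0.79 means that a randomly drawn hazardous event receives a higher classifier score than a randomly drawn non-hazardous event with probability 0.79, independent of any decision threshold; chance level is 0.5.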

Decoding Perceived Hazardousness from User's Brain States to Shape Human-Robot Interaction

With the growing availability of robots and rapid advances in robot autonomy, robots increasingly operate close to humans and interact with them. In such interaction scenarios, it is often evident what a robot should do, yet unclear how the actions should be performed. Humans in the scene nevertheless have subjective preferences over the range of possible robot policies. Hence, robot policy optimization should incorporate the human’s preferences. One way to gather this information online is to decode the human’s brain signals. We present ongoing work on decoding the perceived hazardousness of situations based on brain signals from electroencephalography (EEG). Based on experiments with participants watching potentially hazardous traffic situations, we show that such decoding is feasible and propose to extend the approach towards more complex environments such as robotic assistants. Ultimately, we aim to provide a closed-loop system for human-compliant adaptation of robot policies based on the decoding of EEG signals.

Evaluation of Interactive User Corrections for Lecture Transcription

In this work, we present and evaluate the use of an interactive web interface for browsing and correcting lecture transcripts. An experiment with potential users without transcription experience provides us with a set of example corrections.